Cutting Through the Noise of AI Search Website Optimization

AI Search Has Changed — Here's What Actually Matters

AI search has gone from experimental to mainstream in just about a year. With browsing built into tools like ChatGPT and platforms like Perplexity on the rise, everything’s pointing in one direction: people are getting answers directly from AI, not clicking on blue links.

Some site owners have already seen drops in their usual search traffic. And so the scramble begins: how do you actually optimize for AI search? How do you get your site recommended or quoted in AI answers?

You've probably come across terms like llms.txt, structured data, backlinks, AI checklists, new tools, AI algorithms, and AI search metrics, each pitched as the solution. In this post, we're cutting through the noise to help you understand what's real and what's just industry hype.

How We Got Here

Quick timeline:

- 2023: Google starts testing SGE (Search Generative Experience)

- 2024: Bing leans into AI chat; Perplexity and ChatGPT emerge as alternative search tools

- 2025: Google rolls out AI Overviews globally. Some publishers start losing traffic

What early data shows:

- Fewer clicks from traditional search

- More zero-click answers

- More reliance on summarized content pulled from across the web

Some are already questioning the accuracy of AI responses. Right now, AI models tend to cite forums with strong human input, but they’re also leaning on high-authority websites with lots of backlinks and domain strength. If you’re a smaller site competing with the giants, it can feel like you’re already behind.

The Real Question: What Actually Works?

Let's be honest: a lot of the advice out there is recycled or speculative. Here's how to tell the difference.

What’s More Hype Than Help:

- 'Optimize for AI search' checklists – usually just SEO basics rebranded

- Tools promising 'LLM rankings' – there’s no standard 'AI rank' system yet

- Prompt injection tricks – hiding instructions or stuffing keywords in a page to steer AI responses isn't future-proof

What’s Actually Useful:

- Structured data matters - it helps machines understand and summarize your content

- Alt text, schema, and metadata - they give AI models clarity and context

- llms.txt - a proposed file, placed at the site root like robots.txt, that's aimed specifically at AI models (see below for what it does and doesn't do)
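To make the structured-data point concrete: schema.org markup is commonly embedded in a page as a JSON-LD `<script>` block. Below is a minimal sketch using only Python's standard library that builds an `Article` object. The function name `article_jsonld` and the author and date values are hypothetical placeholders, and which properties any particular AI system actually reads is not something we can confirm.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, description: str) -> str:
    """Return a <script> tag holding minimal schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-06-01"
        "description": description,
    }
    # Escape "<" so a stray "</script>" inside a value cannot close the tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(article_jsonld(
        headline="Cutting Through the Noise of AI Search Website Optimization",
        author="Jane Doe",
        date_published="2025-06-01",
        description="What actually helps a site show up in AI-generated answers.",
    ))
```

The resulting tag goes in the page's HTML; Google's Rich Results Test or the Schema Markup Validator can confirm that it parses.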

What Is llms.txt, Really?

(Screenshot: llmstxt.org, where the llms.txt standard is proposed.)

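In short, llms.txt is a proposed Markdown file, served from your site's root at /llms.txt, that gives AI models a concise summary of your site and links to the pages most worth reading. The idea is to help them interpret your content, not to control whether they can access it.

It's a reading guide, not an opt-out mechanism: if you want to keep AI crawlers away from your content, that is still done through robots.txt. And no, it isn't a sitemap or a way to submit your site to ChatGPT either, and nothing obliges an AI tool to read it.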
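For reference, the llmstxt.org proposal itself describes llms.txt as a plain Markdown file: an H1 with the site's name (the only required part), an optional blockquote summary, and H2 sections that list links, each optionally followed by a colon and a short note. The sketch below renders that layout. `build_llms_txt` is a hypothetical helper of ours, and the store name and URLs in the usage are made up.

```python
from typing import Mapping, Sequence, Tuple

# One file-list entry: (link text, URL, optional note; use "" for no note).
Link = Tuple[str, str, str]


def build_llms_txt(name: str, summary: str, sections: Mapping[str, Sequence[Link]]) -> str:
    """Render text in the llms.txt layout proposed at llmstxt.org."""
    lines = [f"# {name}", ""]  # H1 with the site name: the only required element
    if summary:
        lines += [f"> {summary}", ""]  # short blockquote summary
    for title, links in sections.items():
        lines += [f"## {title}", ""]  # each H2 section holds a list of links
        for text, url, note in links:
            entry = f"- [{text}]({url})"
            if note:
                entry += f": {note}"  # optional note after a colon
            lines.append(entry)
        lines.append("")
    # Trim trailing blank lines and end with exactly one newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Hypothetical site and URLs, for illustration only.
    print(build_llms_txt(
        name="Example Store",
        summary="Handmade ceramics, shipped across the EU.",
        sections={
            "Policies": [
                ("Shipping", "https://example.com/shipping.md", "rates and delivery times"),
                ("Returns", "https://example.com/returns.md", ""),
            ],
        },
    ))
```

The proposal also reserves a section titled "Optional" for links a tool can skip when it is short on context. Producing the file is the easy part; whether any AI product fetches it is the open question.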

Should you care? It isn't confirmed that any major LLM provider has adopted llms.txt yet. So if you're trying to grow reach or visibility, don't expect it to help. Right now it's more of an idea than an actual website optimization tactic.
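If your goal is the opposite, keeping a particular AI crawler away from your pages, the established mechanism is robots.txt, matched by user-agent name. OpenAI, for example, documents that its GPTBot crawler follows robots.txt rules. The sketch below uses Python's built-in `urllib.robotparser` to test a sample robots.txt offline; the rules, URLs, and the `can_crawl` helper are illustrative assumptions, and robots.txt is a request that well-behaved bots honor, not an enforcement mechanism.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt: block OpenAI's GPTBot site-wide, allow every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent fetch url (parsed offline, no network call)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/some-post"
    print(can_crawl(ROBOTS_TXT, "GPTBot", page))     # False
    print(can_crawl(ROBOTS_TXT, "Googlebot", page))  # True
```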

At ilumi, we believe much of the current hype around LLM optimization stems from a desire to control visibility in AI search. But the truth is that it's still early days. LLMs are evolving rapidly, and many of the tools claiming to offer control over this space are vague at best: more market positioning than a proven SEO strategy built to help their users.

Check out this article in which Google's John Mueller agrees that llms.txt doesn't provide any value yet and shares his test results.

Real Ways to Get Your Website Ready for AI Search

These practices actually help, and they're good for traditional SEO too:

- Use structured data: schema.org markup, FAQ blocks, product info, article metadata

- Write answer-focused content: clear summaries, bullet points, and real user questions

- Include image alt text and descriptive filenames for visual context

- Stick to solid technical SEO: fast load times, mobile-friendly layouts, HTTPS, no broken pages, no redirect loops

- Use simple formatting and clean HTML to help LLMs read your content accurately
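The alt-text and filename points are easy to spot-check. This sketch uses Python's standard `html.parser` to flag `<img>` tags with missing or empty alt text and filenames that say nothing about the image. The `GENERIC_NAME` pattern and the `audit_images` helper are our own rough assumptions, not a standard, and an empty `alt` is legitimate for purely decorative images, so treat the output as a review list rather than a verdict.

```python
import re
from html.parser import HTMLParser
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Our own rough heuristic (an assumption, not a standard): names such as
# "image-1.png", "IMG_2034.jpg" or "photo3.webp" say nothing about the picture.
GENERIC_NAME = re.compile(r"^(image|img|photo|pic|dsc|screenshot)[-_ ]?\d*$", re.IGNORECASE)


class ImageAudit(HTMLParser):
    """Collect <img> tags with missing or empty alt text, or generic filenames."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        if "alt" not in attributes:
            self.issues.append((src, "missing alt attribute"))
        elif not (attributes["alt"] or "").strip():
            self.issues.append((src, "empty alt (fine only for decorative images)"))
        if GENERIC_NAME.match(PurePosixPath(urlparse(src).path).stem):
            self.issues.append((src, "generic filename"))


def audit_images(html: str) -> list[tuple[str, str]]:
    """Return (src, problem) pairs for every flagged image in an HTML string."""
    parser = ImageAudit()
    parser.feed(html)
    parser.close()
    return parser.issues


if __name__ == "__main__":
    page = '<img src="/uploads/image-1.png"><img src="/uploads/blue-mug.jpg" alt="Blue ceramic mug">'
    for src, problem in audit_images(page):
        print(f"{src}: {problem}")
```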

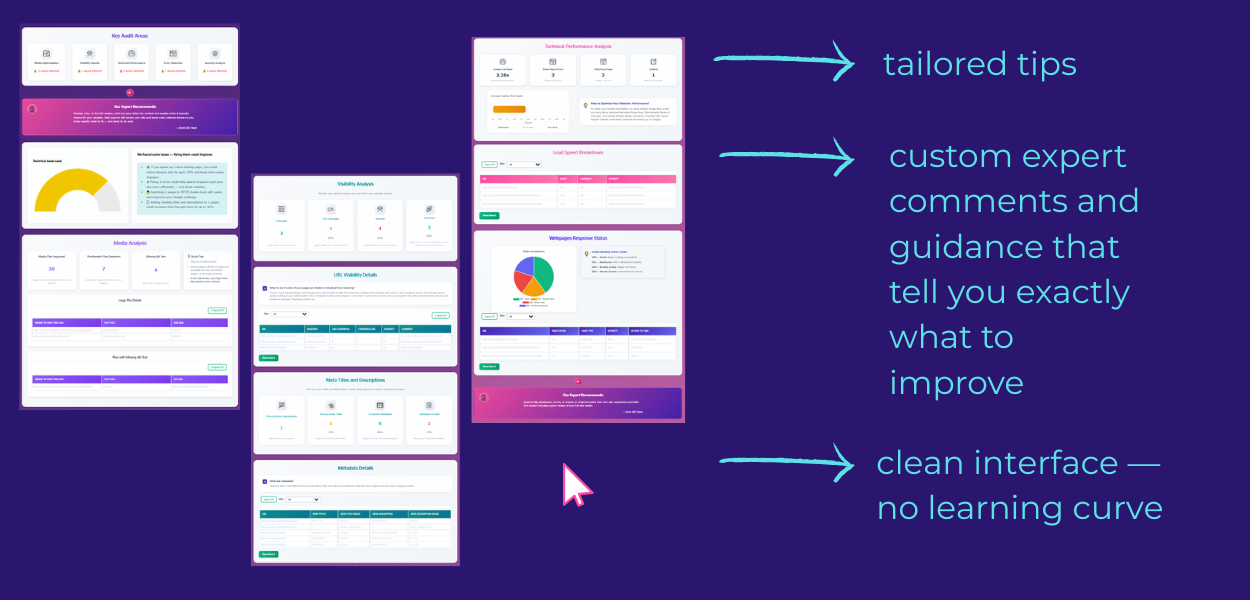

If you're not sure where to start, tools like ilumi can help. Our audits cover all the key points of technical SEO, and the recommended actions are written by real experts, not autogenerated, so your results are tailored and contextual.

So, What Should You Actually Do?

Don't panic, and don't fall for the promises of overhyped 'AI ranking' or 'AI optimization' tools.

The core idea is simple: your content should be understandable by both people and machines. Keep publishing helpful, honest content. Structure it well. Then double-check that your site is fast, readable, secure, and accessible.
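One part of that double-check, the "secure" side together with the "no redirect loops" item from the technical SEO list above, can be sketched as a walk over a redirect map. `check_redirects` is a hypothetical helper of ours: it makes no network requests and assumes you already have a source-to-target dictionary of your site's redirects (for example, exported from a crawler). It reports loops, HTTPS-to-HTTP downgrades, and final pages not served over HTTPS.

```python
from urllib.parse import urlparse


def check_redirects(
    start: str, redirects: dict[str, str], max_hops: int = 10
) -> tuple[list[str], list[str]]:
    """Follow a source->target redirect map from start; return (chain, problems)."""
    chain = [start]
    problems: list[str] = []
    looped = False
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            problems.append("redirect loop: " + " -> ".join(chain + [target]))
            looped = True
            break
        chain.append(target)
        if len(chain) - 1 > max_hops:
            problems.append(f"more than {max_hops} redirects")
            break
    # An http -> https hop is fine; going back from https to http is not.
    for src, dst in zip(chain, chain[1:]):
        if urlparse(src).scheme == "https" and urlparse(dst).scheme == "http":
            problems.append(f"HTTPS downgraded to HTTP: {src} -> {dst}")
    if not looped and urlparse(chain[-1]).scheme != "https":
        problems.append(f"final URL is not HTTPS: {chain[-1]}")
    return chain, problems


if __name__ == "__main__":
    # Hypothetical redirect map, e.g. gathered from a crawl of your own site.
    redirects = {
        "http://example.com/": "https://example.com/",
        "https://example.com/old": "https://example.com/new",
        "https://example.com/new": "https://example.com/old",
    }
    for url in ("http://example.com/", "https://example.com/old"):
        chain, problems = check_redirects(url, redirects)
        print(url, "->", problems or "OK")
```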

You don’t need to chase every new trend or AI tool. The fundamentals still matter more than the flash.

If you want to skip the overwhelm, just run an ilumi audit. We’ll inspect your site and send back a clear, actionable report in one business day. No subscriptions, no sales calls, no guesswork.